Implementing Randomly Wired Neural Networks for Image Recognition on the CIFAR-10 and CIFAR-100 datasets

| Datasets | Model | Accuracy | Epoch | Training Time | Model Parameters |

|---|---|---|---|---|---|

CIFAR-10 | RandWireNN(4, 0.75), c=78 | 93.61% | 77 | 3h 50m | 4.75M

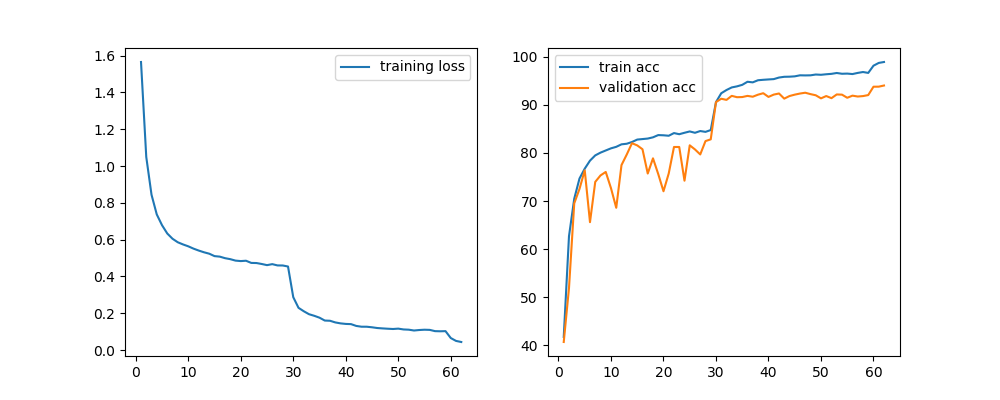

CIFAR-10 | RandWireNN(4, 0.75), c=109 | 94.03% | 62 | 3h 50m | 8.93M

CIFAR-10 | RandWireNN(4, 0.75), c=154 | 94.23% | 94 | 8h 40m | 17.31M

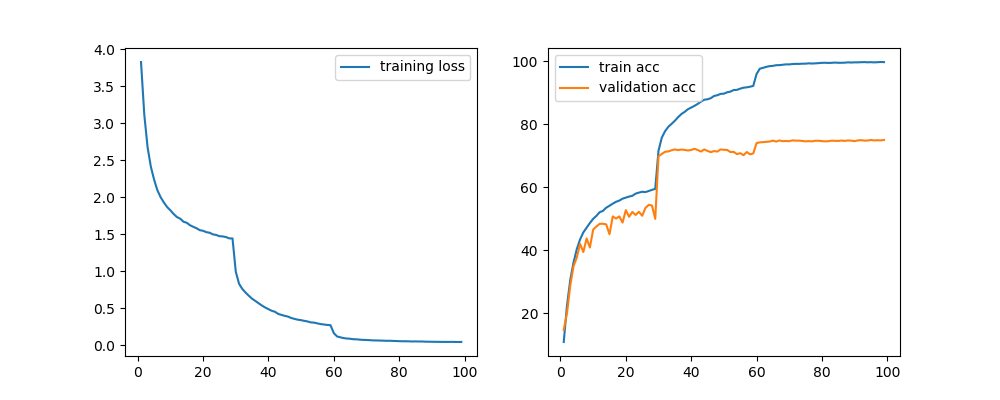

CIFAR-100 | RandWireNN(4, 0.75), c=78 | 73.63% | 97 | 4h 46m | 4.87M

CIFAR-100 | RandWireNN(4, 0.75), c=109 | 75.00% | 99 | 6h 9m | 9.04M

CIFAR-100 | RandWireNN(4, 0.75), c=154 | 75.42% | 99 | 9h 32m | 17.43M

IMAGENET | WORK IN PROGRESS | WORK IN PROGRESS

python main.py

Options:

- `--epochs` (int) - number of epochs (default: 100).
- `--p` (float) - graph probability (default: 0.75).
- `--c` (int) - channel count for each node (example: 78, 109, 154) (default: 78).
- `--k` (int) - number of nearest neighbors each node is connected to in the ring topology (default: 4).
- `--m` (int) - number of edges to attach from a new node to existing nodes (default: 5).
- `--graph-mode` (str) - type of random graph (example: ER, WS, BA) (default: WS).
- `--node-num` (int) - number of graph nodes (default: 32).
- `--learning-rate` (float) - learning rate (default: 1e-1).
- `--model-mode` (str) - which network to use (example: CIFAR10, CIFAR100, SMALL_REGIME, REGULAR_REGIME) (default: CIFAR10).
- `--batch-size` (int) - batch size (default: 100).
- `--dataset-mode` (str) - which dataset to use (example: CIFAR10, CIFAR100, MNIST) (default: CIFAR10).
- `--is-train` (bool) - True if training, False if testing (default: True).
- `--load-model` (bool) - (default: False).
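As a rough illustration of how the options above map onto a command-line parser, here is a minimal `argparse` sketch. It is reconstructed only from the option list and is an assumption, not the code in `main.py`; `build_parser` and `str2bool` are hypothetical helper names. The boolean helper is included because `type=bool` in `argparse` would treat the string `"False"` as true.

```python
import argparse


def str2bool(value: str) -> bool:
    """Turn 'True'/'False'-style strings into booleans (hypothetical helper)."""
    lowered = value.lower()
    if lowered in ("true", "1", "yes"):
        return True
    if lowered in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    # Sketch of the documented options and defaults; not the repository's parser.
    parser = argparse.ArgumentParser(description="RandWireNN options (sketch)")
    parser.add_argument("--epochs", type=int, default=100)
    parser.add_argument("--p", type=float, default=0.75)
    parser.add_argument("--c", type=int, default=78)
    parser.add_argument("--k", type=int, default=4)
    parser.add_argument("--m", type=int, default=5)
    parser.add_argument("--graph-mode", type=str, default="WS")
    parser.add_argument("--node-num", type=int, default=32)
    parser.add_argument("--learning-rate", type=float, default=1e-1)
    parser.add_argument("--model-mode", type=str, default="CIFAR10")
    parser.add_argument("--batch-size", type=int, default=100)
    parser.add_argument("--dataset-mode", type=str, default="CIFAR10")
    parser.add_argument("--is-train", type=str2bool, default=True)
    parser.add_argument("--load-model", type=str2bool, default=False)
    return parser


if __name__ == "__main__":
    # Example: the c=109 CIFAR-100 configuration from the results table.
    args = build_parser().parse_args(
        ["--c", "109", "--dataset-mode", "CIFAR100", "--model-mode", "CIFAR100"]
    )
    print(args.c, args.graph_mode, args.dataset_mode, args.is_train)
```

Note that `argparse` exposes hyphenated flags with underscores, so `--graph-mode` is read as `args.graph_mode`.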

python test.py

Options:

- `--p` (float) - graph probability (default: 0.75).
- `--c` (int) - channel count for each node (example: 78, 109, 154) (default: 78).
- `--k` (int) - number of nearest neighbors each node is connected to in the ring topology (default: 4).
- `--m` (int) - number of edges to attach from a new node to existing nodes (default: 5).
- `--graph-mode` (str) - type of random graph (example: ER, WS, BA) (default: WS).
- `--node-num` (int) - number of graph nodes (default: 32).
- `--model-mode` (str) - which network to use (example: CIFAR10, CIFAR100, SMALL_REGIME, REGULAR_REGIME) (default: CIFAR10).
- `--batch-size` (int) - batch size (default: 100).
- `--dataset-mode` (str) - which dataset to use (example: CIFAR10, CIFAR100, MNIST) (default: CIFAR10).
- `--is-train` (bool) - True if training, False if testing (default: False).
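To make the roles of `--node-num`, `--k`, and `--p` more concrete, the following is a minimal pure-Python sketch of a Watts-Strogatz (WS) graph with the default values (32 nodes, k=4, p=0.75), followed by a conversion to a directed acyclic graph by pointing every edge from the lower node index to the higher one. It is an assumption about how such a graph could be built, not the repository's implementation (which may rely on a graph library), and the function names `watts_strogatz_edges` and `to_dag` are hypothetical.

```python
import random


def watts_strogatz_edges(n: int, k: int, p: float, seed: int = 0) -> set:
    """Return the undirected edges (i, j) with i < j of a WS graph (sketch)."""
    rng = random.Random(seed)
    edges = set()
    # Ring lattice: each node is linked to its k nearest neighbours (k // 2 per side).
    for i in range(n):
        for offset in range(1, k // 2 + 1):
            j = (i + offset) % n
            edges.add((min(i, j), max(i, j)))
    # Rewiring: each lattice edge (i, i + offset) is redirected with probability p
    # to a node that is neither i itself nor already adjacent to i.
    for offset in range(1, k // 2 + 1):
        for i in range(n):
            j = (i + offset) % n
            old = (min(i, j), max(i, j))
            if old in edges and rng.random() < p:
                candidates = [
                    t for t in range(n)
                    if t != i and (min(i, t), max(i, t)) not in edges
                ]
                if candidates:
                    t = rng.choice(candidates)
                    edges.remove(old)
                    edges.add((min(i, t), max(i, t)))
    return edges


def to_dag(n: int, edges: set):
    """Orient each edge from lower to higher index; list predecessors, inputs, outputs."""
    preds = {v: [] for v in range(n)}
    succs = {v: [] for v in range(n)}
    for i, j in sorted(edges):
        succs[i].append(j)
        preds[j].append(i)
    inputs = [v for v in range(n) if not preds[v]]    # nodes with no incoming edge
    outputs = [v for v in range(n) if not succs[v]]   # nodes with no outgoing edge
    return preds, inputs, outputs


if __name__ == "__main__":
    ws_edges = watts_strogatz_edges(n=32, k=4, p=0.75, seed=0)
    preds, inputs, outputs = to_dag(32, ws_edges)
    print(len(ws_edges), "edges;", len(inputs), "input nodes;", len(outputs), "output nodes")
```

Because edges always run from a lower to a higher index, the directed graph cannot contain a cycle, so nodes can be evaluated in index order. Rewiring moves edges but does not add or remove them, which keeps the edge count at `n * k / 2`.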