🤖 Starter code to perform the room rearrangement task within AI2-THOR.

Welcome to the 2023 AI2-THOR Rearrangement Challenge hosted at the

CVPR’23 Embodied-AI Workshop.

The goal of this challenge is to build a model/agent that moves objects in a room

to restore them to a given initial configuration. Please follow the instructions below

to get started.

If you have any questions please file an issue

or post in the #rearrangement-challenge channel on our Ask PRIOR slack.

Our 2023 AI2-THOR Rearrangement Challenge has several upgrades distinguishing it from the 2022 version:

The winners of the 2022 AI2-THOR Rearrangement Challenge and current state-of-the-art include:

Submission name: ProcTHOR + Fine-Tuning

% Fixed Strict (Test): 24.47%

Paper link: ProcTHOR: Large-Scale Embodied AI Using Procedural Generation (@NeurIPS’22)

Team: Matt Deitke, Eli VanderBilt, Alvaro Herrasti, Luca Weihs, Jordi Salvador, Kiana Ehsani, Winson Han, Eric Kolve, Ali Farhadi, Aniruddha Kembhavi, and Roozbeh Mottaghi

Submission name: MaSS: 3D Mapping and Semantic Search

% Fixed Strict (Test): 16.56%

Paper link: A Simple Approach for Visual Room Rearrangement: 3D Mapping and Semantic Search (@ICLR’23)

Codebase: https://github.com/brandontrabucco/mass

Team: Brandon Trabucco, Gunnar A Sigurdsson, Robinson Piramuthu, Gaurav S. Sukhatme, and Ruslan Salakhutdinov

Submission name: TIDEE + open everything

% Fixed Strict (Test): 28.94%

Paper link: TIDEE: Tidying Up Novel Rooms using Visuo-Semantic Commonsense Priors (@ECCV’22)

Codebase: https://github.com/Gabesarch/TIDEE

Team: Gabriel Sarch, Zhaoyuan Fang, Adam W. Harley, Paul Schydlo, Michael Tarr, Saurabh Gupta, and Katerina Fragkiadaki

To begin, clone this repository locally

git clone git@github.com:allenai/ai2thor-rearrangement.git

Here’s a quick summary of the most important files/directories in this repository:

example.py an example script showing how rearrangement tasks can be instantiated for training and

validation.

baseline_configs/

- rearrange_base.py The base configuration file which defines the challenge

parameters (e.g. screen size, allowed actions, etc).

- one_phase/*.py - Baseline experiment configurations for the 1-phase challenge track.

- two_phase/*.py - Baseline experiment configurations for the 2-phase challenge track.

- walkthrough/*.py - Baseline experiment configurations if one wants to train the walkthrough

phase in isolation.

* rearrange/

- baseline_models.py - A collection of baseline models for the 1- and 2-phase challenge tasks. These

Actor-Critic models use a CNN->RNN architecture and can be trained using the experiment configs

under the baseline_configs/[one/two]_phase/ directories.

- constants.py - Constants used to define the rearrangement task. These include the step size

taken by the agent, the unique id of the THOR build we use, etc.

- environment.py - The definition of the RearrangeTHOREnvironment class that wraps the AI2-THOR

environment and enables setting up rearrangement tasks.

- expert.py - The definition of a heuristic expert (GreedyUnshuffleExpert) which uses privileged

information (e.g. the scene graph & knowledge of exact object poses) to solve the rearrangement task.

This heuristic expert is meant to be used to produce expert actions for use with imitation learning

techniques. See the query_expert method within the rearrange.tasks.UnshuffleTask class for

an example of how such an action can be generated.

- losses.py - Losses (outside of those provided by AllenAct by default) used to train our

baseline agents.

- sensors.py - Sensors which provide observations to our agents during training. E.g. the

RGBRearrangeSensor obtains RGB images from the environment and returns them for use by the agent.

- tasks.py - Definitions of the UnshuffleTask, WalkthroughTask, and RearrangeTaskSampler classes.

For more information on how these are used, see the Setting up Rearrangement

section.

- utils.py - Standalone utility functions (e.g. computing IoU between 3D bounding boxes).

You can now either install the requirements into a local python environment or use the provided Dockerfile.

First create a python virtual environment and then install requirements by running

pip install -r requirements.txt

Or, if you prefer using conda, you can create a thor-rearrange environment with our requirements by running

export MY_ENV_NAME=thor-rearrange
export CONDA_BASE="$(dirname $(dirname "${CONDA_EXE}"))"
export PIP_SRC="${CONDA_BASE}/envs/${MY_ENV_NAME}/pipsrc"
conda env create --file environment.yml --name $MY_ENV_NAME

Why not just run conda env create --file environment.yml --name thor-rearrange by itself?

If you were to run conda env create --file environment.yml --name thor-rearrange nothing would break

but we have some pip requirements in our environment.yml file and, by default, these are saved in

a local ./src directory. By explicitly specifying the PIP_SRC variable we can have it place these

pip-installed packages in a nicer (more hidden) location.

This assumes some familiarity with Docker. If you are new to Docker, we recommend reading through

this tutorial.

You first need to make sure you have nvidia-docker installed on your machine. If you don’t, you can install it (assuming

you are running on Ubuntu) by running:

# Installing nvidia-container-toolkit
curl -s -L https://nvidia.github.io/nvidia-container-runtime/gpgkey | \
sudo apt-key add -
distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
curl -s -L https://nvidia.github.io/nvidia-container-runtime/$distribution/nvidia-container-runtime.list | \
sudo tee /etc/apt/sources.list.d/nvidia-container-runtime.list
sudo apt-get update
sudo apt-get install -y nvidia-container-toolkit-base nvidia-container-toolkit
sudo systemctl restart docker

Now cd into the ai2thor-rearrangement repository and then build the docker image by running

DOCKER_BUILDKIT=1 docker build -t rearrangement:latest .

to create a docker image with name rearrangement. Note that the Dockerfile will automatically

copy the contents of the ai2thor-rearrangement repository into the docker image. You can, of course, modify

the Dockerfile to copy in additional files/directories as needed or mount the ai2thor-rearrangement repository

directory on the docker container (see the Docker documentation for

more information) so that any changes you make to your local copy of the repository are reflected in the docker

container (and vice versa).

Now to start the docker container, you can run:

docker run \
--gpus all \
--device /dev/dri \
--mount type=bind,source=/usr/share/vulkan/icd.d/nvidia_icd.json,target=/etc/vulkan/icd.d/nvidia_icd.json \
--mount type=bind,source=/usr/share/vulkan/icd.d/nvidia_layers.json,target=/etc/vulkan/implicit_layer.d/nvidia_layers.json \
--mount type=bind,source=/usr/share/glvnd/egl_vendor.d/10_nvidia.json,target=/usr/share/glvnd/egl_vendor.d/10_nvidia.json \
--shm-size 50G \
-it rearrangement:latest

Please set the shared memory size (--shm-size) to something that your machine can support. Setting this too small can cause

problems in multi-GPU training. Note that, importantly, we are mounting the nvidia_icd.json, nvidia_layers.json, and 10_nvidia.json files

from the host machine into the docker container. This is necessary to ensure that the docker container can use

the Vulkan API (which is used by AI2-THOR). The above assumes that your machine has a working Vulkan installation

(modern versions of Ubuntu come with this pre-installed) and that the above files are present at the

/usr/share/vulkan/icd.d/nvidia_icd.json
/usr/share/vulkan/icd.d/nvidia_layers.json
/usr/share/glvnd/egl_vendor.d/10_nvidia.json

paths. On some machines these files may be located at different paths. If you are

running into issues with the above, you can try checking to see if the above files exist instead at the paths:

/etc/vulkan/icd.d/nvidia_icd.json
/etc/vulkan/implicit_layer.d/nvidia_layers.json
/usr/share/glvnd/egl_vendor.d/10_nvidia.json

or use the find command to search for these files:

find / -name nvidia_icd.json
find / -name nvidia_layers.json
find / -name 10_nvidia.json

Once you find the correct paths, you’ll need to then modify the above docker run command accordingly.

Now that you’ve successfully run the docker container, you can run the following to test that everything is working:

conda activate rearrange
export PYTHONPATH=$PYTHONPATH:$PWD
allenact -o rearrange_out -b . baseline_configs/one_phase/one_phase_rgb_resnet_dagger.py

You should see output that looks like the following

[05/23 15:47:34 INFO:] Running with args Namespace(experiment='baseline_configs/one_phase/one_phase_rgb_resnet_dagger.py', eval=False, config_kwargs=None, extra_tag='', output_dir='rearrange_out', save_dir_fmt=<SaveDirFormat.FLAT: 'FLAT'>, seed=None, experiment_base='.', checkpoint=None, infer_output_dir=False, approx_ckpt_step_interval=None, restart_pipeline=False, deterministic_cudnn=False, max_sampler_processes_per_worker=None, deterministic_agents=False, log_level='info', disable_tensorboard=False, disable_config_saving=False, collect_valid_results=False, valid_on_initial_weights=False, test_expert=False, distributed_ip_and_port='127.0.0.1:0', machine_id=0, callbacks='', enable_crash_recovery=False, test_date=None, approx_ckpt_steps_count=None, skip_checkpoints=0) [main.py: 452]
[05/23 15:47:35 INFO:] Config files saved to rearrange_out/used_configs/OnePhaseRGBResNetDagger_40proc/2023-05-23_15-47-35 [runner.py: 865]
[05/23 15:47:35 INFO:] Using 8 train workers on devices (device(type='cuda', index=0), device(type='cuda', index=1), device(type='cuda', index=2), device(type='cuda', index=3), device(type='cuda', index=4), device(type='cuda', index=5), device(type='cuda', index=6), device(type='cuda', index=7)) [runner.py: 274]
[05/23 15:47:35 INFO:] Engines on machine_id == 0 using port 53495 and seed 137964697 [runner.py: 444]
[05/23 15:47:35 INFO:] Using local worker ids [0, 1, 2, 3, 4, 5, 6, 7] (total 8 workers in machine 0) [runner.py: 283]
[05/23 15:47:36 INFO:] Started 8 train processes [runner.py: 545]
[05/23 15:47:36 INFO:] Using 1 valid workers on devices (device(type='cuda', index=7),) [runner.py: 274]
[05/23 15:47:36 INFO:] Started 1 valid processes [runner.py: 572]
[05/23 15:47:39 INFO:] train 1 args {'experiment_name': 'OnePhaseRGBResNetDagger_40proc', 'config': <baseline_configs.one_phase.one_phase_rgb_resnet_dagger.OnePhaseRGBResNetDaggerExperimentConfig object at 0x7efd483fac70>, 'callback_sensors': [], 'results_queue': <multiprocessing.queues.Queue object at 0x7efd483facd0>, 'checkpoints_queue': <multiprocessing.queues.Queue object at 0x7efd268d5be0>, 'checkpoints_dir': 'rearrange_out/checkpoints/OnePhaseRGBResNetDagger_40proc/2023-05-23_15-47-35', 'seed': 137964697, 'deterministic_cudnn': False, 'mp_ctx': <multiprocessing.context.ForkServerContext object at 0x7efd268dd430>, 'num_workers': 8, 'device': device(type='cuda', index=1), 'distributed_ip': '127.0.0.1', 'distributed_port': 53495, 'max_sampler_processes_per_worker': None, 'save_ckpt_after_every_pipeline_stage': True, 'initial_model_state_dict': '[SUPPRESSED]', 'first_local_worker_id': 0, 'distributed_preemption_threshold': 0.7, 'try_restart_after_task_error': False, 'mode': 'train', 'worker_id': 1[runner.py: 373]
(...)
[05/23 15:53:14 INFO:] TRAIN: 22295 rollout steps ({'onpolicy': 22295}) total_loss 3.17 global_batch_size 2.48e+03 lr 0.0003 rollout_epochs 3 rollout_num_mini_batch 1 worker_batch_size 312 unshuffle/change_energy 2.49 unshuffle/end_energy 0.153 unshuffle/energy_prop 0.058 unshuffle/ep_length 34.8 unshuffle/num_broken 0 unshuffle/num_changed 2.88 unshuffle/num_fixed 2.78 unshuffle/num_initially_misplaced 2.94 unshuffle/num_misplaced 0.166 unshuffle/num_newly_misplaced 0 unshuffle/prop_fixed 0.944 unshuffle/prop_fixed_strict 0.944 unshuffle/prop_misplaced 0.0557 unshuffle/reward 2.26 unshuffle/start_energy 2.56 unshuffle/success 0.861 teacher_ratio/enforced 1 teacher_ratio/sampled 1 imitation_loss/expert_cross_entropy 3.17 elapsed_time 338s [runner.py: 1089]
[05/23 15:56:07 INFO:] TRAIN: 44475 rollout steps ({'onpolicy': 44475}) total_loss 2.48 global_batch_size 2.47e+03 lr 0.0003 rollout_epochs 3 rollout_num_mini_batch 1 worker_batch_size 311 unshuffle/change_energy 2.48 unshuffle/end_energy 0.183 unshuffle/energy_prop 0.0617 unshuffle/ep_length 44.8 unshuffle/num_broken 0 unshuffle/num_changed 2.88 unshuffle/num_fixed 2.79 unshuffle/num_initially_misplaced 2.99 unshuffle/num_misplaced 0.197 unshuffle/num_newly_misplaced 0 unshuffle/prop_fixed 0.94 unshuffle/prop_fixed_strict 0.94 unshuffle/prop_misplaced 0.0605 unshuffle/reward 2.3 unshuffle/start_energy 2.6 unshuffle/success 0.865 teacher_ratio/enforced 1 teacher_ratio/sampled 1 imitation_loss/expert_cross_entropy 2.48 elapsed_time 173s approx_fps 128 onpolicy/approx_eps 128 [runner.py: 1089]

Note that it may take several minutes before the lines with TRAIN: start appearing because these only

print after many thousands of steps have been taken. This can be annoying when debugging. If you’d like to print these

logs more frequently, change the metric_accumulate_interval argument

to the TrainingPipeline in the baseline_configs/rearrange_base.py file to be some small integer value (e.g. metric_accumulate_interval=1).

Python 3.6+ 🐍. Each of the actions supports typing within Python.

AI2-THOR 5.0.0 🧞. To ensure reproducible results, we’re restricting all users to use the exact same version of AI2-THOR.

AllenAct 🏋💪. We use the AllenAct reinforcement learning framework

for generating baseline models, baseline training pipelines, and for several of their helpful abstractions/utilities.

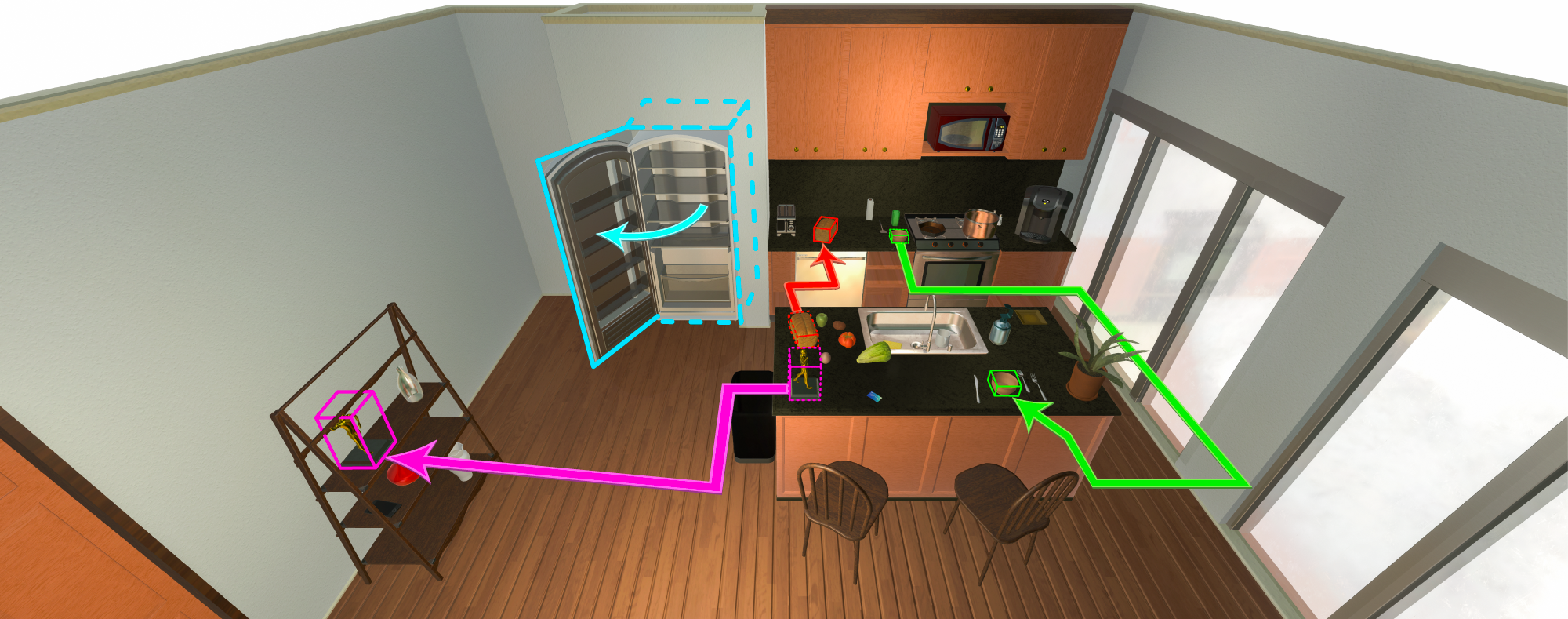

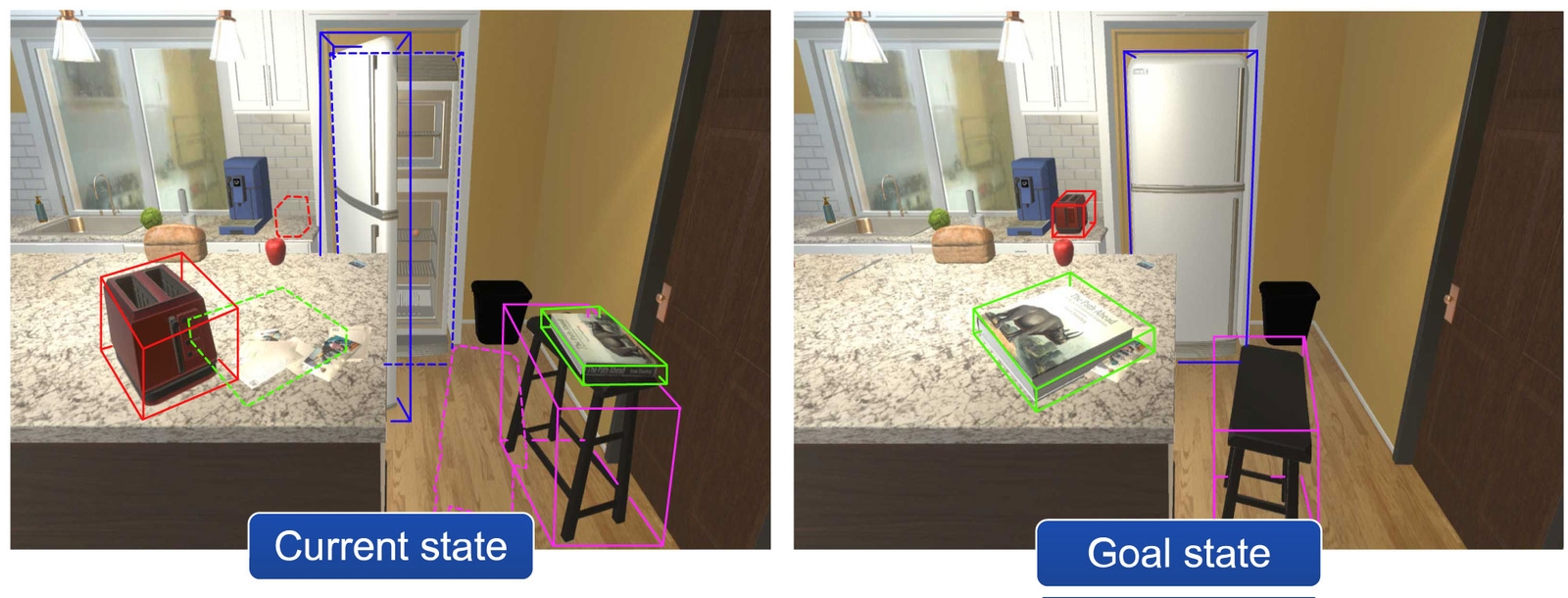

Overview 🤖. Our rearrangement task involves moving and modifying (i.e. opening/closing) randomly placed objects within a room

to obtain a goal configuration. There are 2 phases:

As in prior years, for this 2023 challenge we have two distinct tracks:

For this challenge we have three dataset splits: "train", "val", and "test".

The "train" split uses floor plans 1-20, 201-220, 301-320, and 401-420 within AI2-THOR,

the "val" split uses floor plans 21-25, 221-225, 321-325, and 421-425, and finally the "test" split uses

floor plans 26-30, 226-230, 326-330, and 426-430. These dataset splits are stored as the compressed pickle-serialized files data/*.pkl.gz. While you are free (and encouraged) to enhance the training set as you see fit, you should

never train your agent within any of the test scenes.
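The split definitions above can be turned into a small guard against accidentally training on test scenes. This is only a sketch: the `FloorPlan<N>` naming and the room-type offsets (0, 200, 300, 400) are assumptions based on AI2-THOR's usual scene numbering, and the helper names are hypothetical rather than part of this repository.

```python
from typing import List

# Assumed offsets for the four AI2-THOR room types; the within-type index
# ranges (1-20, 21-25, 26-30) follow the split description in the text.
ROOM_TYPE_OFFSETS = (0, 200, 300, 400)
SPLIT_INDEX_RANGES = {
    "train": range(1, 21),
    "val": range(21, 26),
    "test": range(26, 31),
}


def scenes_for_split(split: str) -> List[str]:
    """Return the floor plan names assigned to the given split."""
    return [
        f"FloorPlan{offset + index}"
        for offset in ROOM_TYPE_OFFSETS
        for index in SPLIT_INDEX_RANGES[split]
    ]


def assert_no_test_scenes(training_scenes: List[str]) -> None:
    """Raise a ValueError if any scene used for training is a test scene."""
    leaked = set(training_scenes) & set(scenes_for_split("test"))
    if leaked:
        raise ValueError(f"Test scenes used for training: {sorted(leaked)}")


# Training on train + val scenes passes the check.
assert_no_test_scenes(scenes_for_split("train") + scenes_for_split("val"))
print(len(scenes_for_split("train")), len(scenes_for_split("test")))
```

Calling `assert_no_test_scenes` once on whatever scene list you assemble for an enhanced training set is a cheap way to honor the rule above.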

For evaluation, your model will need to be evaluated on each of the above splits and the results

submitted to our leaderboard link (see section below). As the "train" set is

quite large, we do not expect you to evaluate on its entirety. Instead we select a subset of its datapoints (800 episodes, see the table below)

for use in evaluation. For convenience, we provide the data/combined.pkl.gz

file which contains the "train", "val", and "test" datapoints that should

be used for evaluation.

| Split | # Total Episodes | # Episodes for Eval | Path |

|---|---|---|---|

| train | 4000 | 800 | data/2023/train.pkl.gz |

| val | 1000 | 1000 | data/2023/val.pkl.gz |

| test | 1000 | 1000 | data/2023/test.pkl.gz |

| combined | 2800 | 2800 | data/2023/combined.pkl.gz |
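If you want to inspect one of these files outside of the provided task sampler, a minimal sketch using only the standard library is shown below. It assumes the files are plain gzip-compressed pickles and, for `count_episodes`, that each file maps scene names to lists of episode specifications; both the layout and the helper names are assumptions, so check them against the actual data. Only unpickle files you trust.

```python
import gzip
import os
import pickle
import tempfile
from typing import Any, Dict, List


def load_split(path: str) -> Any:
    """Load a gzip-compressed, pickle-serialized file such as data/2023/val.pkl.gz."""
    with gzip.open(path, "rb") as f:
        return pickle.load(f)


def count_episodes(split_data: Dict[str, List[Any]]) -> int:
    """Count episodes, ASSUMING a mapping from scene name to a list of episodes."""
    return sum(len(episodes) for episodes in split_data.values())


# Demonstration on a toy file with the assumed layout (not real challenge data).
toy = {"FloorPlan21": [{"index": 0}, {"index": 1}], "FloorPlan22": [{"index": 0}]}
with tempfile.TemporaryDirectory() as tmp_dir:
    toy_path = os.path.join(tmp_dir, "toy.pkl.gz")
    with gzip.open(toy_path, "wb") as f:
        pickle.dump(toy, f)
    loaded = load_split(toy_path)

print(count_episodes(loaded))
```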

We are tracking challenge participant entries using the AI2 Leaderboard. The team

with the best submission made to either of the below leaderboards by June 12th

(midnight, anywhere on earth) will be announced at the

CVPR’23 Embodied-AI Workshop and invited to produce a video describing their approach.

Note that a winning submission must be materially different from the baseline models we provide and from

submissions made to prior years’ challenges.

In particular, our 2023 leaderboard links can be found at

Our older (2021/2022) leaderboards are also available indefinitely

(2021 1-phase,

2021 2-phase,

2022 1-phase,

2022 2-phase).

Note that our 2022/2021 challenges use different datasets and older versions of AI2-THOR and so results will not be

directly comparable.

Submissions should include your agent’s trajectories for all tasks contained within the combined.pkl.gz

dataset. This “combined” dataset includes tasks for the train, validation, and test sets. For an example

as to how to iterate through all the datapoints in this dataset and save the resulting

metrics in our expected submission format see here.

A (full) example of the expected submission format for the 1-phase task can be found here

and, for the 2-phase task, can be found here.

Note that this submission format is a gzip’ed json file where the json file has the form

{
  "UNIQUE_ID_OF_TASK_0": YOUR_AGENTS_METRICS_AND_TRAJECTORY_FOR_TASK_0,
  "UNIQUE_ID_OF_TASK_1": YOUR_AGENTS_METRICS_AND_TRAJECTORY_FOR_TASK_1,
  ...
}

These metrics and unique IDs can be easily obtained when iterating over the dataset (see the above example).

Alternatively: if you run inference on the combined dataset using AllenAct (see below

for more details) then you can simply (1) gzip the metrics*.json file saved when running inference, (2) rename

this file submission.json.gz, and (3) submit this file to the leaderboard directly.
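If you collect the per-task results yourself, writing them in the gzip'ed JSON layout described above takes only a few lines. The sketch below uses the standard library; the function names and the toy task IDs are illustrative and not part of this repository.

```python
import gzip
import json
import os
import tempfile
from typing import Any, Dict


def write_submission(results: Dict[str, Any], path: str = "submission.json.gz") -> None:
    """Write {unique_task_id: metrics_and_trajectory} as a gzip'ed JSON file."""
    with gzip.open(path, "wt", encoding="utf-8") as f:
        json.dump(results, f)


def read_submission(path: str) -> Dict[str, Any]:
    """Read a submission back, e.g. to sanity-check it before uploading."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        return json.load(f)


# Round trip with toy results (the IDs and metric values are made up).
toy_results = {
    "toy_task_0": {"unshuffle/success": 0.0, "unshuffle/prop_fixed_strict": 0.5},
    "toy_task_1": {"unshuffle/success": 1.0, "unshuffle/prop_fixed_strict": 1.0},
}
with tempfile.TemporaryDirectory() as tmp_dir:
    submission_path = os.path.join(tmp_dir, "submission.json.gz")
    write_submission(toy_results, submission_path)
    round_tripped = read_submission(submission_path)

print(sorted(round_tripped))
```

Reading the file back before uploading is a quick way to confirm that every task ID you expect is present and that all values are JSON-serializable.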

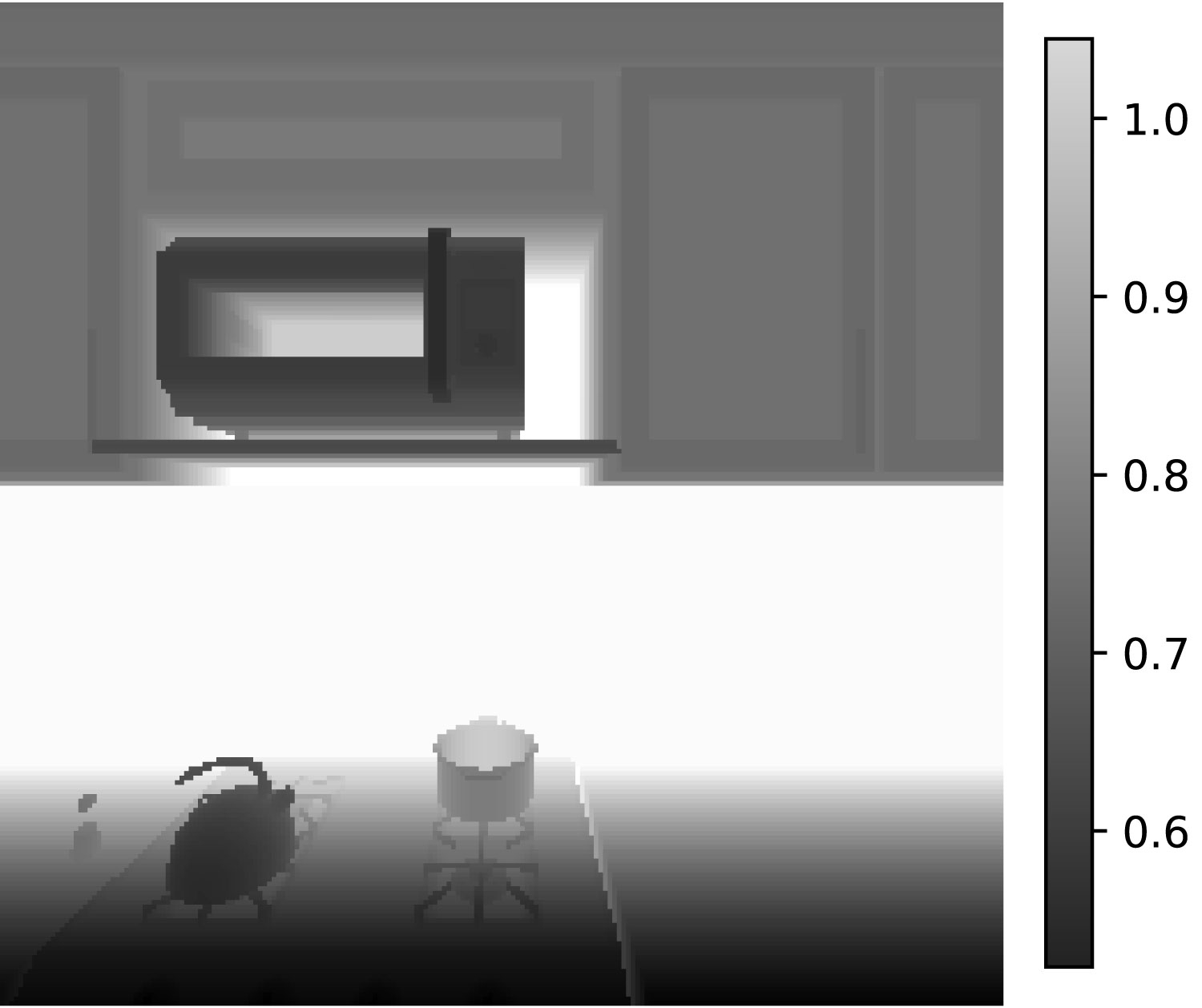

In both of these tracks, agents should make decisions based off of egocentric sensor readings. The

types of sensors allowed/provided for this challenge include:

- RGB images of shape 224x224x3 and an FOV of 90 degrees.
- Depth maps of shape 224x224 and an FOV of 90 degrees.

While you are absolutely free to use any sensor information you would like during training (e.g.

pretraining your CNN using semantic segmentations from AI2-THOR or using a scene graph to compute

expert actions for imitation learning) such additional sensor information should not

be used at inference time.

A total of 82 actions are available to our agents. These include:

Navigation

Move[Ahead/Left/Right/Back] - Results in the agent moving 0.25m in the specified direction if doing so would

not result in the agent colliding with something.

Rotate[Right/Left] - Results in the agent rotating 90 degrees clockwise (if Right) or counterclockwise (if Left).

This action may fail if the agent is holding an object and rotating would cause the object to collide.

Look[Up/Down] - Results in the agent raising or lowering its camera angle by 30 degrees (up to a max of 60 degrees below horizontal and 30 degrees above horizontal).

Object Interaction

Pickup[OBJECT_TYPE] - Where OBJECT_TYPE is one of the 62 pickupable object types, see constants.py.

This action results in the agent picking up a visible object of type OBJECT_TYPE if: (a) the agent is not already

holding an object, (b) the agent is close enough to the object (within 1.5m), and (c) picking up the object would not

result in it colliding with objects in front of the agent. If there are multiple objects of type OBJECT_TYPE

then the object closest to the agent is chosen.

Open[OBJECT_TYPE] - Where OBJECT_TYPE is one of the 10 openable object types that are not also

pickupable, see constants.py. If an object of the given type is visible (and within 1.5m of the agent), its openness will be toggled between

two possible values. If multiple objects of the same type are visible, we decide which object will be toggled using a priority value that is randomly

generated for each object at the start of the episode. Note that objects whose goal state at the start of the unshuffle

phase are different from those in the walkthrough phase are always assigned higher priority than other objects so that

it is always possible to bring them into their goal state.

PlaceObject - Results in the agent dropping its held object. If the held object’s goal state is visible and within

1.5m of the agent, it is placed into that goal state. Otherwise, a heuristic is used to place the

object on a nearby surface if possible.

Done action

Done - Results in the walkthrough or unshuffle phase immediately terminating.

See the example.py file for an example of how you can instantiate the 1- and 2-phase

variants of our rearrangement task.
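As a quick check of the action count given earlier, the action space can be enumerated from its parts: 8 navigation actions, 62 Pickup actions, 10 Open actions, PlaceObject, and Done. The sketch below uses placeholder object type names and an illustrative naming scheme; the real object types live in constants.py, and the action strings used by the repository may be formatted differently.

```python
from typing import List

NAVIGATION_ACTIONS = [
    "MoveAhead", "MoveLeft", "MoveRight", "MoveBack",
    "RotateRight", "RotateLeft",
    "LookUp", "LookDown",
]


def build_action_names(pickupable_types: List[str], openable_types: List[str]) -> List[str]:
    """Compose the full action list from the pickupable and openable object types."""
    return (
        NAVIGATION_ACTIONS
        + [f"Pickup[{object_type}]" for object_type in pickupable_types]
        + [f"Open[{object_type}]" for object_type in openable_types]
        + ["PlaceObject", "Done"]
    )


# Placeholder type names standing in for the lists defined in constants.py.
pickupable = [f"PickupableType{i}" for i in range(62)]
openable = [f"OpenableType{i}" for i in range(10)]
actions = build_action_names(pickupable, openable)
print(len(actions))  # 8 + 62 + 10 + 1 + 1 = 82
```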

The rearrange.environment.RearrangeTHOREnvironment class provides a wrapper around the AI2-THOR environment

and is designed to

poses and compare_poses methods)

You’ll notice that the above RearrangeTHOREnvironment is not explicitly instantiated by the example.py

script and, instead, we create rearrange.tasks.RearrangeTaskSampler objects using the TwoPhaseRGBBaseExperimentConfig.make_sampler_fn and OnePhaseRGBBaseExperimentConfig.make_sampler_fn.

This is because the RearrangeTHOREnvironment is very flexible and doesn’t know anything about

training/validation/test datasets, the types of actions we want our agent to be restricted to use,

or precisely which types of sensor observations we want to give our agents (e.g. RGB images, depth maps, etc).

All of these extra details are managed by the RearrangeTaskSampler which iteratively creates new

tasks for our agent to complete when calling the next_task method. During training, these new tasks can be sampled

indefinitely while, during validation or testing, the tasks will only be sampled until the validation/test datasets

are exhausted. This sampling is best understood by example so please go over the example.py file.
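The sampling behavior described above (endless tasks during training, a finite stream during validation and testing) can be illustrated with a toy stand-in. `ToyTaskSampler` below is hypothetical and is not the repository's RearrangeTaskSampler; it also assumes that exhaustion is signaled by `next_task` returning `None`, which you should confirm against example.py.

```python
from typing import List, Optional


class ToyTaskSampler:
    """Stand-in sampler: cycles forever in training, stops when exhausted otherwise."""

    def __init__(self, task_ids: List[str], stage: str) -> None:
        self.task_ids = task_ids
        self.stage = stage
        self._next_index = 0

    def next_task(self) -> Optional[str]:
        if not self.task_ids:
            return None  # nothing to sample at all
        if self._next_index >= len(self.task_ids):
            if self.stage != "train":
                return None  # the validation/test dataset is exhausted
            self._next_index = 0  # training restarts from the first task
        task_id = self.task_ids[self._next_index]
        self._next_index += 1
        return task_id


def run_all_tasks(sampler: ToyTaskSampler) -> List[str]:
    """Consume a finite (non-training) sampler until it signals exhaustion."""
    completed = []
    task = sampler.next_task()
    while task is not None:
        completed.append(task)  # a real loop would step the agent through the task here
        task = sampler.next_task()
    return completed


print(run_all_tasks(ToyTaskSampler(["task_a", "task_b"], stage="val")))
```

The `while task is not None` loop is the pattern to keep in mind for evaluation; do not use it on a training sampler, which by design never runs out.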

As described above, the RearrangeTaskSampler samples tasks for our agent to complete. These tasks correspond

to instantiations of the rearrange.tasks.WalkthroughTask and rearrange.tasks.UnshuffleTask classes. For the 2-phase

challenge track, the RearrangeTaskSampler will first sample a new WalkthroughTask after which it will sample a

corresponding UnshuffleTask where the agent must return the objects to their poses at the start of the WalkthroughTask.

Accessing object poses 🧘. The poses of all objects in the environment can be accessed

using the RearrangeTHOREnvironment.poses property, i.e.

unshuffle_start_poses, walkthrough_start_poses, current_poses = env.poses # where env is a RearrangeTHOREnvironment instance

Reading an object’s pose 📖. Here, unshuffle_start_poses, walkthrough_start_poses, and current_poses

evaluate to a list of dictionaries and are defined as:

- unshuffle_start_poses stores a list of object poses if the agent were to do nothing to env during the unshuffling phase.
- walkthrough_start_poses stores a list of object poses that the agent sees during the walkthrough phase.
- current_poses stores a list of object poses in the current state of the environment (i.e. possibly after the agent has made changes to env during the unshuffling phase).

Each dictionary contains the object’s pose in a form similar to:

{
    "type": "Candle",
    "position": {
        "x": -0.3012670874595642,
        "y": 0.7431036233901978,
        "z": -2.040205240249634
    },
    "rotation": {
        "x": 2.958569288253784,
        "y": 0.027708930894732475,
        "z": 0.6745457053184509
    },
    "openness": None,
    "pickupable": True,
    "broken": False,
    "objectId": "Candle|-00.30|+00.74|-02.04",
    "name": "Candle_977f7f43",
    "parentReceptacles": [
        "Bathtub|-01.28|+00.28|-02.53"
    ],
    "bounding_box": [
        [-0.27043721079826355, 0.6975823640823364, -2.0129783153533936],
        [-0.3310248851776123, 0.696869969367981, -2.012985944747925],
        [-0.3310534358024597, 0.6999208927154541, -2.072017192840576],
        [-0.27046576142311096, 0.7006332278251648, -2.072009563446045],
        [-0.272365003824234, 0.8614493608474731, -2.0045082569122314],
        [-0.3329526484012604, 0.8607369661331177, -2.0045158863067627],
        [-0.3329811990261078, 0.8637878894805908, -2.063547134399414],
        [-0.27239352464675903, 0.8645002245903015, -2.063539505004883]
    ]
}

Matching objects across poses 🤝. Across unshuffle_start_poses, walkthrough_start_poses, and current_poses,

the ith entry in each list will always correspond to the same object across each pose list.

So, unshuffle_start_poses[5] will refer to the same object as walkthrough_start_poses[5] and current_poses[5].

Most scenes have around 70 objects, among which, 10 to 20 are pickupable by the agent.
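Because the pose lists are index-aligned, comparing two of them is a matter of zipping them together. The sketch below lists objects whose position differs between two pose lists using plain Euclidean distance. It is a simplification for illustration, not the repository's compare_poses method, and the helper names and the `tol` threshold are arbitrary assumptions.

```python
import math
from typing import Any, Dict, List


def position_distance(pose_a: Dict[str, Any], pose_b: Dict[str, Any]) -> float:
    """Euclidean distance between the "position" entries of two pose dictionaries."""
    return math.sqrt(
        sum((pose_a["position"][k] - pose_b["position"][k]) ** 2 for k in ("x", "y", "z"))
    )


def moved_objects(
    goal_poses: List[Dict[str, Any]],
    current_poses: List[Dict[str, Any]],
    tol: float = 0.01,  # hypothetical threshold in meters
) -> List[str]:
    """Names of objects whose position differs by more than `tol` between the lists."""
    moved = []
    for goal, current in zip(goal_poses, current_poses):
        # Index alignment means both entries describe the same object.
        assert goal["name"] == current["name"]
        if position_distance(goal, current) > tol:
            moved.append(current["name"])
    return moved


# Toy poses containing only the keys this sketch needs.
toy_goal = [
    {"name": "Candle_977f7f43", "position": {"x": -0.30, "y": 0.74, "z": -2.04}},
    {"name": "Bowl_toy", "position": {"x": 1.00, "y": 0.90, "z": 0.50}},
]
toy_current = [
    {"name": "Candle_977f7f43", "position": {"x": -0.30, "y": 0.74, "z": -2.04}},
    {"name": "Bowl_toy", "position": {"x": 1.50, "y": 0.90, "z": 0.50}},
]
print(moved_objects(toy_goal, toy_current))
```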

Pose keys 🔑.

- openness specifies the [0:1] percentage that an object is opened. For objects where the openness value does not apply (e.g. Bowl, Spoon), the openness value is None.
- bounding_box is only given for moveable objects, where the set of moveable objects may consist of couches or chairs, ...
- broken states if the object broke from the agent’s actions during the unshuffling phase. The initial pose or ...

To evaluate the quality of a rearrangement agent we compute several metrics measuring how well the agent

has managed to move objects so that their final poses are (approximately) equal to their goal poses.

Recall that we represent the pose of an object as a combination of its:

To measure if two object poses are approximately equal we use the following criteria:

- The openness between its goal state and predicted state is off by less than 20 percent. The openness check is only applied to objects that can open.
- The object’s 3D bounding box from its goal pose and the predicted pose must have an IoU over 0.5. The positional check is only relevant to objects that can move.
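The two checks described above (openness within 20 percent, bounding-box IoU over 0.5) can be sketched as follows. This is an illustration under a simplifying assumption: the IoU here is computed between the axis-aligned boxes enclosing each set of 8 corners, whereas the repository's own IoU utility (see utils.py) may handle rotated boxes. The function names and the toy `unit_cube` helper are hypothetical.

```python
from itertools import product
from typing import Any, Dict, List, Sequence, Tuple

Corners = Sequence[Sequence[float]]


def _extent(corners: Corners) -> Tuple[List[float], List[float]]:
    """Axis-aligned min/max coordinates of a box given as corner points."""
    mins = [min(c[i] for c in corners) for i in range(3)]
    maxs = [max(c[i] for c in corners) for i in range(3)]
    return mins, maxs


def _volume(mins: List[float], maxs: List[float]) -> float:
    volume = 1.0
    for lo, hi in zip(mins, maxs):
        volume *= hi - lo
    return volume


def aabb_iou(corners_a: Corners, corners_b: Corners) -> float:
    """IoU of the axis-aligned boxes enclosing two sets of corners (a simplification)."""
    (min_a, max_a), (min_b, max_b) = _extent(corners_a), _extent(corners_b)
    inter_min = [max(a, b) for a, b in zip(min_a, min_b)]
    inter_max = [min(a, b) for a, b in zip(max_a, max_b)]
    if any(hi <= lo for lo, hi in zip(inter_min, inter_max)):
        return 0.0  # no overlap along at least one axis
    inter = _volume(inter_min, inter_max)
    return inter / (_volume(min_a, max_a) + _volume(min_b, max_b) - inter)


def poses_approx_equal(
    goal: Dict[str, Any],
    current: Dict[str, Any],
    openness_tol: float = 0.2,
    iou_threshold: float = 0.5,
) -> bool:
    """Apply the openness check to openable objects and the IoU check to boxed ones."""
    if goal.get("openness") is not None and current.get("openness") is not None:
        if abs(goal["openness"] - current["openness"]) >= openness_tol:
            return False
    if goal.get("bounding_box") is not None and current.get("bounding_box") is not None:
        if aabb_iou(goal["bounding_box"], current["bounding_box"]) <= iou_threshold:
            return False
    return True


def unit_cube(x_offset: float) -> List[Tuple[float, float, float]]:
    """Corners of a unit cube shifted along x (toy data for the demo)."""
    return [(x + x_offset, y, z) for x, y, z in product((0.0, 1.0), repeat=3)]


toy_goal_pose = {"openness": None, "bounding_box": unit_cube(0.0)}
print(poses_approx_equal(toy_goal_pose, {"openness": None, "bounding_box": unit_cube(0.2)}))
print(poses_approx_equal(toy_goal_pose, {"openness": None, "bounding_box": unit_cube(0.5)}))
```

In the demo, a shift of 0.2 leaves an IoU of about 0.67 and passes, while a shift of 0.5 leaves an IoU of about 0.33 and fails.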

Suppose that task is an instance of an UnshuffleTask in which your agent has taken

actions until reaching a terminal state (e.g. either the agent has taken the maximum number of steps or it

has taken the "done" action). Then metrics regarding the agent’s performance can be computed by calling

the task.metrics() function. This will return a dictionary of the form

{
    "task_info": {
        "scene": "FloorPlan420",
        "index": 7,
        "stage": "train"
    },
    "ep_length": 176,
    "unshuffle/ep_length": 7,
    "unshuffle/reward": 0.5058389582634852,
    "unshuffle/start_energy": 0.5058389582634852,
    "unshuffle/end_energy": 0.0,
    "unshuffle/prop_fixed": 1.0,
    "unshuffle/prop_fixed_strict": 1.0,
    "unshuffle/num_misplaced": 0,
    "unshuffle/num_newly_misplaced": 0,
    "unshuffle/num_initially_misplaced": 1,
    "unshuffle/num_fixed": 1,
    "unshuffle/num_broken": 0,
    "unshuffle/change_energy": 0.5058464936498058,
    "unshuffle/num_changed": 1,
    "unshuffle/prop_misplaced": 0.0,
    "unshuffle/energy_prop": 0.0,
    "unshuffle/success": 0.0,
    "walkthrough/ep_length": 169,
    "walkthrough/reward": 1.82,
    "walkthrough/num_explored_xz": 17,
    "walkthrough/num_explored_xzr": 46,
    "walkthrough/prop_visited_xz": 0.5151515151515151,
    "walkthrough/prop_visited_xzr": 0.3484848484848485,
    "walkthrough/num_obj_seen": 11,
    "walkthrough/prop_obj_seen": 0.9166666666666666
}
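Given one such dictionary per task, summary numbers can be obtained by averaging selected keys across tasks. The sketch below assumes a plain unweighted mean over episodes; that aggregation rule, the choice of keys, and the `summarize` helper are assumptions for illustration, and the leaderboard's own aggregation may differ.

```python
from statistics import mean
from typing import Any, Dict, Iterable, Sequence

# Keys chosen for illustration; any numeric entries of the metrics dict would work.
SUMMARY_KEYS = (
    "unshuffle/success",
    "unshuffle/prop_misplaced",
    "unshuffle/prop_fixed_strict",
    "unshuffle/energy_prop",
)


def summarize(
    metrics_per_task: Iterable[Dict[str, Any]],
    keys: Sequence[str] = SUMMARY_KEYS,
) -> Dict[str, float]:
    """Average the selected metrics over tasks (raises StatisticsError if there are none)."""
    all_metrics = list(metrics_per_task)
    return {key: mean(float(m[key]) for m in all_metrics) for key in keys}


# Toy metrics for two tasks (values are made up).
toy_metrics = [
    {"unshuffle/success": 1.0, "unshuffle/prop_misplaced": 0.0,
     "unshuffle/prop_fixed_strict": 1.0, "unshuffle/energy_prop": 0.0},
    {"unshuffle/success": 0.0, "unshuffle/prop_misplaced": 0.5,
     "unshuffle/prop_fixed_strict": 0.5, "unshuffle/energy_prop": 0.25},
]
summary = summarize(toy_metrics)
print(summary["unshuffle/prop_fixed_strict"])  # (1.0 + 0.5) / 2 = 0.75
```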

Of the above metrics, the most important (those used for comparing models) are

- "unshuffle/success" - This is the most unforgiving of our metrics and equals 1 if all ...
- "unshuffle/prop_misplaced" - The above success metric is quite strict, requiring exact ...
- "unshuffle/prop_fixed_strict" - This metric equals 0 if, at the end of the unshuffle task, ...
- "unshuffle/energy_prop" - The above metrics do not give any partial credit if, ... D that monotonically decreases to 0 as two poses get closer together (see code for full details) and which equals ...

We use the AllenAct framework for training our baseline rearrangement models;

this framework is automatically installed when installing the requirements for this project.

Before running training or inference you’ll first have to add the ai2thor-rearrangement directory

to your PYTHONPATH (so that python and AllenAct know where to look for various modules).

To do this you can run the following:

cd YOUR/PATH/TO/ai2thor-rearrangement
export PYTHONPATH=$PYTHONPATH:$PWD

Let’s say you want to train a model for the 1-phase challenge. This can be easily done by running the command

allenact -o rearrange_out -b . baseline_configs/one_phase/one_phase_rgb_resnet_dagger.py

This will train (using DAgger, a form of imitation learning) a model which uses a pretrained (with frozen

weights) ResNet18 as the visual backbone that feeds into a recurrent neural network (a GRU) before

producing action probabilities and a value estimate. Results from this training are then saved to rearrange_out where you can find model checkpoints, tensorboard plots, and configuration files that can

be used if you, in the future, forget precisely what the details of your experiment were.

A similar model can be trained for the 2-phase challenge by running

allenact -o rearrange_out -b . baseline_configs/two_phase/two_phase_rgb_resnet_ppowalkthrough_ilunshuffle.py

In the below table we provide a collection of pretrained models from:

We have only evaluated a subset of these models on our 2022 dataset.

| Model | % Fixed Strict (2023 dataset, test) | % Fixed Strict (2022 dataset, test) | % Fixed Strict (2021 dataset, test) | Pretrained Model |

|---|---|---|---|---|

| 1-Phase Embodied CLIP ResNet50 IL | 13.6% | 19.1% | 17.3% | (link) |

| 1-Phase ResNet18+ANM IL | - | - | 8.9% | (link) |

| 1-Phase ResNet50 IL | - | - | 7.0% | (link) |

| 1-Phase ResNet18 IL | - | - | 6.3% | (link) |

| 1-Phase ResNet18 PPO | - | - | 5.3% | (link) |

| 1-Phase Simple IL | - | - | 4.8% | (link) |

| 1-Phase Simple PPO | - | - | 4.6% | (link) |

| 2-Phase ResNet18+ANM IL+PPO | - | 0.53% | 1.44% | (link) |

| 2-Phase ResNet18 IL+PPO | - | - | 0.66% | (link) |

These models can be downloaded from the above links and should be placed into the pretrained_model_ckpts directory.

You can then, for example, run inference for the 1-Phase ResNet18 IL model using AllenAct by running:

export CURRENT_TIME=$(date '+%Y-%m-%d_%H-%M-%S') # This is just to record when you ran this inference
allenact baseline_configs/one_phase/one_phase_rgb_resnet_dagger.py \
-c pretrained_model_ckpts/exp_OnePhaseRGBResNetDagger_40proc__stage_00__steps_000050058550.pt \
--extra_tag $CURRENT_TIME \
--eval

This will evaluate this model across all datapoints in the data/combined.pkl.gz dataset

which contains data from the train, val, and test sets so that

evaluation doesn’t have to be run on each set separately.

If you use this work, please cite our CVPR’21 paper:

@InProceedings{RoomR,
  author = {Luca Weihs and Matt Deitke and Aniruddha Kembhavi and Roozbeh Mottaghi},
  title = {Visual Room Rearrangement},
  booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2021}
}