reference code for syntaxnet


2017. 3. 27

- python : 2.7
- bazel : 0.4.3
- protobuf : 3.2.0
- syntaxnet : 40a5739ae26baf6bfa352d2dec85f5ca190254f8

2017. 3. 10

- python : 2.7
- bazel : 0.4.3
- protobuf : 3.0.0b2, 3.2.0
- syntaxnet : bc70271a51fe2e051b5d06edc6b9fd94880761d5

2016. 8. 16

- syntaxnet : a5d45f2ed20effaabc213a2eb9def291354af1ec

    # after installing syntaxnet.
    # gpu supporting : https://github.com/tensorflow/models/issues/248#issuecomment-288991859
    $ pwd
    /path/to/models/syntaxnet
    $ git clone https://github.com/dsindex/syntaxnet.git work
    $ cd work
    $ echo "hello syntaxnet" | ./demo.sh
    # training parser only with parsed corpus
    $ ./parser_trainer_test.sh

    $ cd work
    $ mkdir corpus
    $ cd corpus
    # download ud-treebanks-v2.0.tgz into this directory, then unpack it
    $ tar -zxvf ud-treebanks-v2.0.tgz
    $ ls universal-dependencies-2.0
    UD_Ancient_Greek UD_Basque UD_Czech ....

    # for example, training UD_English.
    # detailed instructions can be found in https://github.com/tensorflow/models/tree/master/syntaxnet
    $ ./train.sh -v -v
    ...
    #preprocessing with tagger
    INFO:tensorflow:Seconds elapsed in evaluation: 9.77, eval metric: 99.71%
    INFO:tensorflow:Seconds elapsed in evaluation: 1.26, eval metric: 92.04%
    INFO:tensorflow:Seconds elapsed in evaluation: 1.26, eval metric: 92.07%
    ...
    #pretrain parser
    INFO:tensorflow:Seconds elapsed in evaluation: 4.97, eval metric: 82.20%
    ...
    #evaluate pretrained parser
    INFO:tensorflow:Seconds elapsed in evaluation: 44.30, eval metric: 92.36%
    INFO:tensorflow:Seconds elapsed in evaluation: 5.42, eval metric: 82.67%
    INFO:tensorflow:Seconds elapsed in evaluation: 5.59, eval metric: 82.36%
    ...
    #train parser
    INFO:tensorflow:Seconds elapsed in evaluation: 57.69, eval metric: 83.95%
    ...
    #evaluate parser
    INFO:tensorflow:Seconds elapsed in evaluation: 283.77, eval metric: 96.54%
    INFO:tensorflow:Seconds elapsed in evaluation: 34.49, eval metric: 84.09%
    INFO:tensorflow:Seconds elapsed in evaluation: 34.97, eval metric: 83.49%
    ...
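When comparing runs it can help to pull the numbers out of the training log instead of reading them by eye. The following is only a minimal sketch: the function name `extract_eval_metrics` and the regular expression are my own, written against the log lines shown above, and are not part of syntaxnet or of the scripts in this repo.

```python
import re

# Matches evaluation lines such as:
# INFO:tensorflow:Seconds elapsed in evaluation: 34.49, eval metric: 84.09%
EVAL_LINE = re.compile(
    r"Seconds elapsed in evaluation: (?P<seconds>[\d.]+), "
    r"eval metric: (?P<metric>[\d.]+)%"
)


def extract_eval_metrics(log_text):
    """Return a list of (seconds, metric_percent) tuples found in log_text."""
    results = []
    for match in EVAL_LINE.finditer(log_text):
        results.append(
            (float(match.group("seconds")), float(match.group("metric")))
        )
    return results


# The three lines printed by the final "#evaluate parser" step above.
sample_log = """\
INFO:tensorflow:Seconds elapsed in evaluation: 283.77, eval metric: 96.54%
INFO:tensorflow:Seconds elapsed in evaluation: 34.49, eval metric: 84.09%
INFO:tensorflow:Seconds elapsed in evaluation: 34.97, eval metric: 83.49%
"""

metrics = extract_eval_metrics(sample_log)
for seconds, metric in metrics:
    print("%.2fs -> %.2f%%" % (seconds, metric))
```

The same function can be fed the full output of `./train.sh`, for example after redirecting it to a file.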

    # if you have another pos-tagger and want to build only the parser from the parsed corpus :
    $ ./train_p.sh -v -v
    ...
    #pretrain parser
    ...
    #evaluate pretrained parser
    INFO:tensorflow:Seconds elapsed in evaluation: 44.15, eval metric: 92.21%
    INFO:tensorflow:Seconds elapsed in evaluation: 5.56, eval metric: 87.84%
    INFO:tensorflow:Seconds elapsed in evaluation: 5.43, eval metric: 86.56%
    ...
    #train parser
    ...
    #evaluate parser
    INFO:tensorflow:Seconds elapsed in evaluation: 279.04, eval metric: 94.60%
    INFO:tensorflow:Seconds elapsed in evaluation: 33.19, eval metric: 88.60%
    INFO:tensorflow:Seconds elapsed in evaluation: 32.57, eval metric: 87.77%
    ...

    $ echo "this is my own tagger and parser" | ./test.sh
    ...
    Input: this is my own tagger and parser
    Parse:
    tagger NN ROOT
    +-- this DT nsubj
    +-- is VBZ cop
    +-- my PRP$ nmod:poss
    +-- own JJ amod
    +-- and CC cc
    +-- parser NN conj
    # original model
    $ echo "this is my own tagger and parser" | ./demo.sh
    Input: this is my own tagger and parser
    Parse:
    tagger NN ROOT
    +-- this DT nsubj
    +-- is VBZ cop
    +-- my PRP$ poss
    +-- own JJ amod
    +-- and CC cc
    +-- parser ADD conj
    $ echo "Bob brought the pizza to Alice ." | ./test.sh
    Input: Bob brought the pizza to Alice .
    Parse:
    brought VBD ROOT
    +-- Bob NNP nsubj
    +-- pizza NN dobj
    | +-- the DT det
    +-- Alice NNP nmod
    | +-- to IN case
    +-- . . punct
    # original model
    $ echo "Bob brought the pizza to Alice ." | ./demo.sh
    Input: Bob brought the pizza to Alice .
    Parse:
    brought VBD ROOT
    +-- Bob NNP nsubj
    +-- pizza NN dobj
    | +-- the DT det
    +-- to IN prep
    | +-- Alice NNP pobj
    +-- . . punct

    # the corpus is accessible through the path on this image : https://raw.githubusercontent.com/dsindex/blog/master/images/url_sejong.png
    # copy sejong_treebank.txt.v1 to `sejong` directory.
    $ ./sejong/split.sh
    $ ./sejong/c2d.sh
    $ ./train_sejong.sh
    #pretrain parser
    ...
    INFO:tensorflow:Seconds elapsed in evaluation: 14.18, eval metric: 93.43%
    ...
    #evaluate pretrained parser
    INFO:tensorflow:Seconds elapsed in evaluation: 116.08, eval metric: 95.11%
    INFO:tensorflow:Seconds elapsed in evaluation: 14.60, eval metric: 93.76%
    INFO:tensorflow:Seconds elapsed in evaluation: 14.45, eval metric: 93.78%
    ...
    #evaluate pretrained parser by eoj-based
    accuracy(UAS) = 0.903289
    accuracy(UAS) = 0.876198
    accuracy(UAS) = 0.876888
    ...
    #train parser
    INFO:tensorflow:Seconds elapsed in evaluation: 137.36, eval metric: 94.12%
    ...
    #evaluate parser
    INFO:tensorflow:Seconds elapsed in evaluation: 1806.21, eval metric: 96.37%
    INFO:tensorflow:Seconds elapsed in evaluation: 224.40, eval metric: 94.19%
    INFO:tensorflow:Seconds elapsed in evaluation: 223.75, eval metric: 94.25%
    ...
    #evaluate parser by eoj-based
    accuracy(UAS) = 0.928845
    accuracy(UAS) = 0.886139
    accuracy(UAS) = 0.887824
    ...

    $ cat sejong/tagged_input.sample
    1 프랑스 프랑스 NNP NNP _ 0 _ _ _
    2 의 의 JKG JKG _ 0 _ _ _
    3 세계 세계 NNG NNG _ 0 _ _ _
    4 적 적 XSN XSN _ 0 _ _ _
    5 이 이 VCP VCP _ 0 _ _ _
    6 ᆫ ᆫ ETM ETM _ 0 _ _ _
    7 의상 의상 NNG NNG _ 0 _ _ _
    8 디자이너 디자이너 NNG NNG _ 0 _ _ _
    9 엠마누엘 엠마누엘 NNP NNP _ 0 _ _ _
    10 웅가로 웅가로 NNP NNP _ 0 _ _ _
    11 가 가 JKS JKS _ 0 _ _ _
    12 실내 실내 NNG NNG _ 0 _ _ _
    13 장식 장식 NNG NNG _ 0 _ _ _
    14 용 용 XSN XSN _ 0 _ _ _
    15 직물 직물 NNG NNG _ 0 _ _ _
    16 디자이너 디자이너 NNG NNG _ 0 _ _ _
    17 로 로 JKB JKB _ 0 _ _ _
    18 나서 나서 VV VV _ 0 _ _ _
    19 었 었 EP EP _ 0 _ _ _
    20 다 다 EF EF _ 0 _ _ _
    21 . . SF SF _ 0 _ _ _
    $ cat sejong/tagged_input.sample | ./test_sejong.sh -v -v
    Input: 프랑스 의 세계 적 이 ᆫ 의상 디자이너 엠마누엘 웅가로 가 실내 장식 용 직물 디자이너 로 나서 었 다 .
    Parse:
    . SF ROOT
    +-- 다 EF MOD
    +-- 었 EP MOD
    +-- 나서 VV MOD
    +-- 가 JKS NP_SBJ
    | +-- 웅가로 NNP MOD
    | +-- 디자이너 NNG NP
    | | +-- 의 JKG NP_MOD
    | | | +-- 프랑스 NNP MOD
    | | +-- ᆫ ETM VNP_MOD
    | | | +-- 이 VCP MOD
    | | | +-- 적 XSN MOD
    | | | +-- 세계 NNG MOD
    | | +-- 의상 NNG NP
    | +-- 엠마누엘 NNP NP
    +-- 로 JKB NP_AJT
    +-- 디자이너 NNG MOD
    +-- 직물 NNG NP
    +-- 실내 NNG NP
    +-- 용 XSN NP
    +-- 장식 NNG MOD
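The tagged input above repeats the same ten-column pattern for every morpheme: index, form, form again, tag, tag again, `_`, `0`, and three more `_`. A rough sketch of producing such rows from `(form, tag)` pairs could look like this. `to_conll_rows` is a hypothetical helper of mine, not the code in `sejong/tagger.py`, and the separator is a parameter because I am only assuming the real file is tab-separated (the sample above is displayed with single spaces).

```python
# -*- coding: utf-8 -*-


def to_conll_rows(tagged_tokens, sep="\t"):
    """Format (form, tag) pairs as the ten-column rows shown in the sample.

    The head column is left as 0 and the unused columns as "_", which is
    what the sample input contains before parsing.
    """
    rows = []
    for index, (form, tag) in enumerate(tagged_tokens, start=1):
        columns = [str(index), form, form, tag, tag, "_", "0", "_", "_", "_"]
        rows.append(sep.join(columns))
    return rows


# First three morphemes of the sample sentence above.
sample_tokens = [("프랑스", "NNP"), ("의", "JKG"), ("세계", "NNG")]
sample_rows = to_conll_rows(sample_tokens, sep=" ")
for row in sample_rows:
    print(row)
```

Any morphological analyzer that yields `(form, tag)` pairs could feed this function.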

    # after installing konlpy ( http://konlpy.org/ko/v0.4.3/ )
    $ python sejong/tagger.py
    나는 학교에 간다.
    1 나 나 NP NP _ 0 _ _ _
    2 는 는 JX JX _ 0 _ _ _
    3 학교 학교 NNG NNG _ 0 _ _ _
    4 에 에 JKB JKB _ 0 _ _ _
    5 가 가 VV VV _ 0 _ _ _
    6 ㄴ다 ㄴ다 EF EF _ 0 _ _ _
    7 . . SF SF _ 0 _ _ _
    $ echo "나는 학교에 간다." | python sejong/tagger.py | ./test_sejong.sh
    Input: 나 는 학교 에 가 ㄴ다 .
    Parse:
    . SF ROOT
    +-- ㄴ다 EF MOD
    +-- 가 VV MOD
    +-- 는 JX NP_SBJ
    | +-- 나 NP MOD
    +-- 에 JKB NP_AJT
    +-- 학교 NNG MOD

    $ bazel-bin/tensorflow_serving/example/parsey_client --server=localhost:9000
    나는 학교에 간다
    Input : 나는 학교에 간다
    Parsing :
    {"result": [{"text": "나 는 학교 에 가 ㄴ다", "token": [{"category": "NP", "head": 1, "end": 2, "label": "MOD", "start": 0, "tag": "NP", "word": "나"}, {"category": "JX", "head": 4, "end": 6, "label": "NP_SBJ", "start": 4, "tag": "JX", "word": "는"}, {"category": "NNG", "head": 3, "end": 13, "label": "MOD", "start": 8, "tag": "NNG", "word": "학교"}, {"category": "JKB", "head": 4, "end": 17, "label": "NP_AJT", "start": 15, "tag": "JKB", "word": "에"}, {"category": "VV", "head": 5, "end": 21, "label": "MOD", "start": 19, "tag": "VV", "word": "가"}, {"category": "EC", "end": 28, "label": "ROOT", "start": 23, "tag": "EC", "word": "ㄴ다"}], "docid": "-:0"}]}
    ...
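The client prints its result as JSON, so it is easy to post-process. Below is a small sketch, assuming only the field names visible in the output above (`result`, `token`, `word`, `tag`, `label`, `head`). The helper `summarize_tokens` is hypothetical, and the sample string is a trimmed copy of the response with three of the six tokens. Note that the ROOT token has no `head` field in the output, so the sketch reads it with `.get()`.

```python
# -*- coding: utf-8 -*-
import json

# Trimmed copy of the response shown above (three of the six tokens).
response_text = """
{"result": [{"text": "나 는 학교 에 가 ㄴ다", "token": [
  {"category": "NP", "head": 1, "end": 2, "label": "MOD", "start": 0, "tag": "NP", "word": "나"},
  {"category": "JX", "head": 4, "end": 6, "label": "NP_SBJ", "start": 4, "tag": "JX", "word": "는"},
  {"category": "EC", "end": 28, "label": "ROOT", "start": 23, "tag": "EC", "word": "ㄴ다"}
], "docid": "-:0"}]}
"""


def summarize_tokens(text):
    """Return (word, tag, label, head) tuples; head is None when absent."""
    response = json.loads(text)
    summary = []
    for sentence in response["result"]:
        for token in sentence["token"]:
            summary.append(
                (token["word"], token["tag"], token["label"], token.get("head"))
            )
    return summary


summary = summarize_tokens(response_text)
for word, tag, label, head in summary:
    print("%s\t%s\t%s\t%s" % (word, tag, label, head))
```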

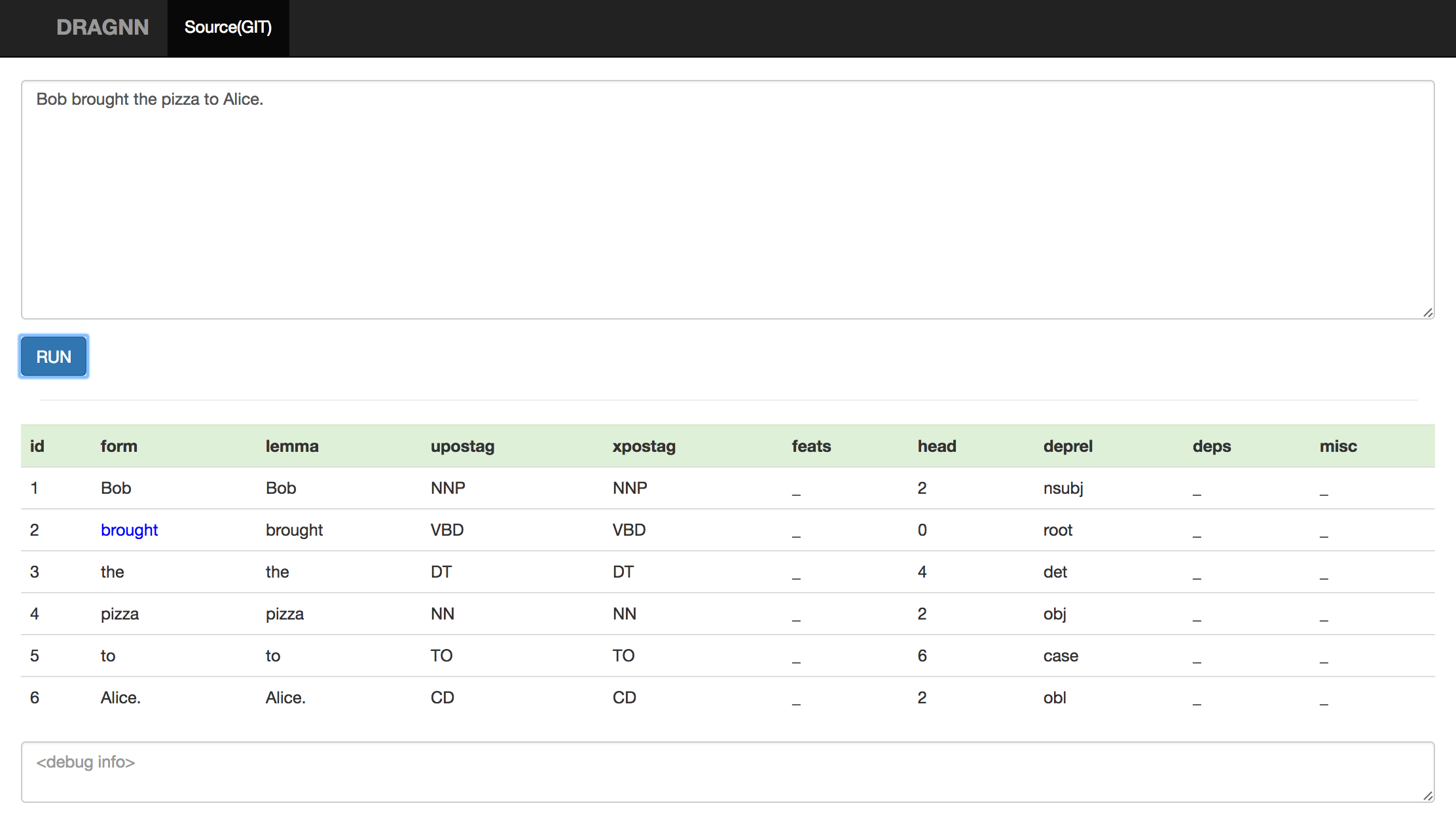

English

    $ echo "Bob brought the pizza to Alice." | ./parse.sh
    Bob brought the pizza to Alice .

    1 Bob Number=Sing|fPOS=PROPN++NNP 0
    2 brought Mood=Ind|Tense=Past|VerbForm=Fin|fPOS=VERB++VBD 0
    3 the Definite=Def|PronType=Art|fPOS=DET++DT 0
    4 pizza Number=Sing|fPOS=NOUN++NN 0
    5 to fPOS=ADP++IN 0
    6 Alice Number=Sing|fPOS=PROPN++NNP 0
    7 . fPOS=PUNCT++. 0

    1 Bob PROPN NNP Number=Sing|fPOS=PROPN++NNP 0
    2 brought VERB VBD Mood=Ind|Tense=Past|VerbForm=Fin|fPOS=VERB++VBD 0
    3 the DET DT Definite=Def|PronType=Art|fPOS=DET++DT 0
    4 pizza NOUN NN Number=Sing|fPOS=NOUN++NN 0
    5 to ADP IN fPOS=ADP++IN 0
    6 Alice PROPN NNP Number=Sing|fPOS=PROPN++NNP 0
    7 . PUNCT . fPOS=PUNCT++. 0

    1 Bob PROPN NNP Number=Sing|fPOS=PROPN++NNP 2 nsubj
    2 brought VERB VBD Mood=Ind|Tense=Past|VerbForm=Fin|fPOS=VERB++VBD 0 ROOT
    3 the DET DT Definite=Def|PronType=Art|fPOS=DET++DT 4 det
    4 pizza NOUN NN Number=Sing|fPOS=NOUN++NN 2 dobj
    5 to ADP IN fPOS=ADP++IN 6 case
    6 Alice PROPN NNP Number=Sing|fPOS=PROPN++NNP 2 nmod
    7 . PUNCT . fPOS=PUNCT++. 2 punct _

    Input: Bob brought the pizza to Alice .
    Parse:
    brought VERB++VBD ROOT
    +-- Bob PROPN++NNP nsubj
    +-- pizza NOUN++NN dobj
    | +-- the DET++DT det
    +-- Alice PROPN++NNP nmod
    | +-- to ADP++IN case
    +-- . PUNCT++. punct
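In the output above, the feature column packs the morphological attributes as `key=value` pairs joined by `|`, and the `fPOS` value joins the coarse and fine tags with `++` (compare `fPOS=VERB++VBD` with the `VERB VBD` columns in the second stage). A minimal sketch of unpacking that string follows. Both function names are made up for illustration and are not part of syntaxnet.

```python
def parse_morph_features(feature_string):
    """Split 'A=x|B=y' into a dict such as {'A': 'x', 'B': 'y'}."""
    features = {}
    for pair in feature_string.split("|"):
        key, _, value = pair.partition("=")
        features[key] = value
    return features


def split_fpos(features):
    """Return (coarse_tag, fine_tag) from the fPOS entry, e.g. ('VERB', 'VBD')."""
    coarse, _, fine = features["fPOS"].partition("++")
    return coarse, fine


# Feature string of the token "brought" from the output above.
features = parse_morph_features("Mood=Ind|Tense=Past|VerbForm=Fin|fPOS=VERB++VBD")
print(features["Tense"])
print(split_fpos(features))
```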

- downloaded model vs trained model
```shell
1. downloaded model
Language No. tokens POS fPOS Morph UAS LAS
-------------------------------------------------------
English 25096 90.48% 89.71% 91.30% 84.79% 80.38%
2. trained model
INFO:tensorflow:Total processed documents: 2077
INFO:tensorflow:num correct tokens: 18634
INFO:tensorflow:total tokens: 22395
INFO:tensorflow:Seconds elapsed in evaluation: 19.85, eval metric: 83.21%
3. where does the difference(84.79% - 83.21%) come from?
as mentioned https://research.googleblog.com/2016/08/meet-parseys-cousins-syntax-for-40.html
they found good hyperparameters by using MapReduce.
for example,
the hyperparameters for POS tagger :
- POS_PARAMS=128-0.08-3600-0.9-0
- decay_steps=3600
- hidden_layer_sizes=128
- learning_rate=0.08
- momentum=0.9
```
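Two small things in the comparison above can be checked mechanically: the eval metric is just correct tokens over total tokens, and the `POS_PARAMS` string lines up with the four named hyperparameters listed after it. The sketch below is an illustration only. `parse_pos_params` is a hypothetical helper, the field order is inferred from the values listed above, and the trailing `0` is left unlabeled because the text does not say what it is.

```python
def accuracy_percent(correct, total):
    """Percentage of correct tokens, as reported by 'eval metric'."""
    return 100.0 * correct / total


def parse_pos_params(param_string):
    """Split a POS_PARAMS string into the hyperparameters named in the text.

    Field order is inferred from the listed values
    (hidden_layer_sizes, learning_rate, decay_steps, momentum).
    Anything after the fourth field is kept as-is, unlabeled.
    """
    fields = param_string.split("-")
    return {
        "hidden_layer_sizes": fields[0],
        "learning_rate": float(fields[1]),
        "decay_steps": int(fields[2]),
        "momentum": float(fields[3]),
        "unlabeled_fields": fields[4:],
    }


params = parse_pos_params("128-0.08-3600-0.9-0")
print(params)
print("%.2f%%" % accuracy_percent(18634, 22395))
```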

    $ cd ../
    $ pwd
    /path/to/models/syntaxnet
    $ bazel build -c opt //examples/dragnn:tutorial_1

    # compile
    $ pwd
    /path/to/models/syntaxnet
    $ bazel build -c opt //work/dragnn_examples:write_master_spec
    $ bazel build -c opt //work/dragnn_examples:train_dragnn
    $ bazel build -c opt //work/dragnn_examples:inference_dragnn
    # training
    $ cd work
    $ ./train_dragnn.sh -v -v
    ...
    INFO training step: 25300, actual: 25300
    INFO training step: 25400, actual: 25400
    INFO finished step: 25400, actual: 25400
    INFO Annotating datset: 2002 examples
    INFO Done. Produced 2002 annotations
    INFO Total num documents: 2002
    INFO Total num tokens: 25148
    INFO POS: 85.63%
    INFO UAS: 79.67%
    INFO LAS: 74.36%
    ...
    # test
    $ echo "i love this one" | ./test_dragnn.sh
    Input: i love this one
    Parse:
    love VBP root
    +-- i PRP nsubj
    +-- one CD obj
    +-- this DT det
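The UAS and LAS numbers in the log above are attachment scores: UAS counts tokens whose predicted head is correct, LAS additionally requires the dependency label to match. Here is a rough sketch of the usual token-level definition. It is not the DRAGNN evaluation code, and real evaluators may differ in details such as punctuation handling. The gold heads and labels are taken from the "Bob brought the pizza to Alice ." parse earlier in this README; the predicted list is invented purely to show the calculation.

```python
def attachment_scores(gold, predicted):
    """Return (UAS, LAS) in percent for parallel lists of (head, label)."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    total = len(gold)
    if total == 0:
        raise ValueError("cannot score an empty sentence")
    # UAS: head matches. LAS: head and label both match.
    uas_hits = sum(1 for g, p in zip(gold, predicted) if g[0] == p[0])
    las_hits = sum(1 for g, p in zip(gold, predicted) if g == p)
    return 100.0 * uas_hits / total, 100.0 * las_hits / total


# Gold: "Bob brought the pizza to Alice ." as parsed earlier in this README.
gold = [(2, "nsubj"), (0, "ROOT"), (4, "det"), (2, "dobj"),
        (6, "case"), (2, "nmod"), (2, "punct")]
# Invented prediction: wrong head for "to", wrong label for "Alice".
predicted = [(2, "nsubj"), (0, "ROOT"), (4, "det"), (2, "dobj"),
             (4, "case"), (2, "pobj"), (2, "punct")]

uas, las = attachment_scores(gold, predicted)
print("UAS: %.2f%%  LAS: %.2f%%" % (uas, las))
```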

    # compile
    $ pwd
    /path/to/models/syntaxnet
    $ bazel build -c opt //work/dragnn_examples:write_master_spec
    $ bazel build -c opt //work/dragnn_examples:train_dragnn
    $ bazel build -c opt //work/dragnn_examples:inference_dragnn_sejong
    # training
    $ cd work
    # to prepare the corpus, please refer to the `training parser from Sejong treebank corpus` section.
    $ ./train_dragnn_sejong.sh -v -v
    ...
    INFO training step: 33100, actual: 33100
    INFO training step: 33200, actual: 33200
    INFO finished step: 33200, actual: 33200
    INFO Annotating datset: 4114 examples
    INFO Done. Produced 4114 annotations
    INFO Total num documents: 4114
    INFO Total num tokens: 97002
    INFO POS: 93.95%
    INFO UAS: 91.38%
    INFO LAS: 87.76%
    ...
    # test
    # after installing konlpy ( http://konlpy.org/ko/v0.4.3/ )
    $ echo "제주로 가는 비행기가 심한 비바람에 회항했다." | ./test_dragnn_sejong.sh
    INFO Read 1 documents
    Input: 제주 로 가 는 비행기 가 심하 ㄴ 비바람 에 회항 하 았 다 .
    Parse:
    . SF VP
    +-- 다 EF MOD
    +-- 았 EP MOD
    +-- 하 XSA MOD
    +-- 회항 SN MOD
    +-- 가 JKS NP_SBJ
    | +-- 비행기 NNG MOD
    | +-- 는 ETM VP_MOD
    | +-- 가 VV MOD
    | +-- 로 JKB NP_AJT
    | +-- 제주 MAG MOD
    +-- 에 JKB NP_AJT
    +-- 비바람 NNG MOD
    +-- ㄴ SN MOD
    +-- 심하 VV NP
    # the pos tagging results from the dragnn seem to be somewhat incorrect,
    # so i replace them with the results from the Komoran tagger.
    # you can modify 'inference_dragnn_sejong.py' to use the tags from the dragnn.
    Input: 제주 로 가 는 비행기 가 심하 ㄴ 비바람 에 회항 하 았 다 .
    Parse:
    . SF VP
    +-- 다 EF MOD
    +-- 았 EP MOD
    +-- 하 XSV MOD
    +-- 회항 NNG MOD
    +-- 가 JKS NP_SBJ
    | +-- 비행기 NNG MOD
    | +-- 는 ETM VP_MOD
    | +-- 가 VV MOD
    | +-- 로 JKB NP_AJT
    | +-- 제주 NNG MOD
    +-- 에 JKB NP_AJT
    +-- 비바람 NNG MOD
    +-- ㄴ ETM MOD
    +-- 심하 VA NP
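The tag replacement described in the comments above amounts to keeping the parser's attachments and labels while swapping in the tags of an external tagger, token by token. The sketch below only illustrates that idea. `override_tags` is a hypothetical helper and is not taken from `inference_dragnn_sejong.py`; the three example tokens and their tags come from the two parses shown above.

```python
# -*- coding: utf-8 -*-


def override_tags(parsed_tokens, external_tags):
    """Replace the tag in each (word, tag, label) with the external tag.

    Both sequences must be aligned token by token.
    """
    if len(parsed_tokens) != len(external_tags):
        raise ValueError("token and tag sequences must be the same length")
    return [
        (word, new_tag, label)
        for (word, _old_tag, label), new_tag in zip(parsed_tokens, external_tags)
    ]


# Three tokens from the first parse above, with the tags predicted by dragnn.
dragnn_tokens = [("회항", "SN", "MOD"), ("하", "XSA", "MOD"), ("심하", "VV", "NP")]
# Tags for the same tokens as they appear in the second parse.
komoran_tags = ["NNG", "XSV", "VA"]

merged = override_tags(dragnn_tokens, komoran_tags)
for word, tag, label in merged:
    print("%s %s %s" % (word, tag, label))
```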

web api using tornado

```